AMD MI325X's Dilemma: A Performance Gem, So Why Is the Market Response Tepid?

In the fiercely competitive AI chip market, the rivalry between AMD and NVIDIA is a constant focus of industry attention. AMD's release of the MI325X AI chip, which the company claims outperforms NVIDIA's H200 GPU, has escalated that competition once again. Yet the market's response was subdued, and AMD's stock price even declined after the launch, which warrants a closer look.

Introduction

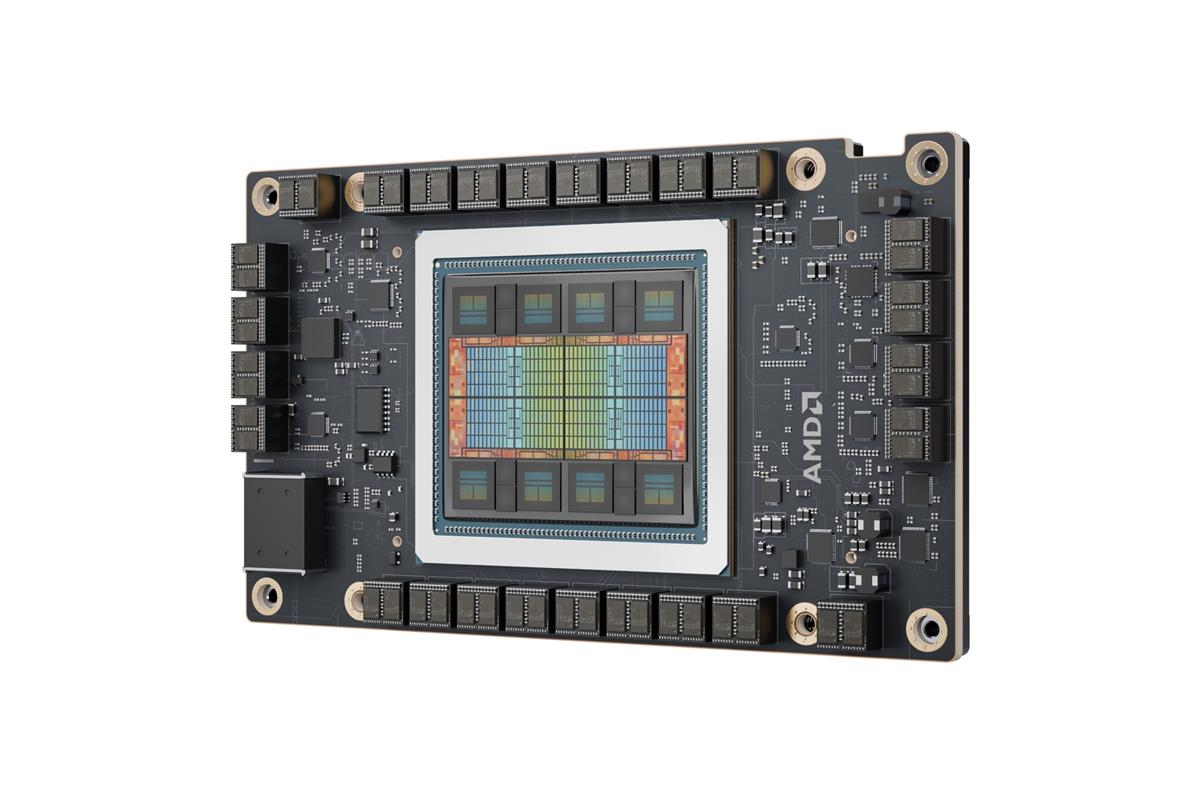

Competition in the AI chip market is intensifying, and AMD's newly released MI325X AI chip shows clear advantages in computing power, energy efficiency, and memory bandwidth. Built on the CDNA 3 architecture, it is AMD's first accelerator to adopt HBM3E high-bandwidth memory, providing up to 6TB/s of memory bandwidth and up to 256GB of memory capacity, a significant improvement over its predecessor, the MI300X GPU. At FP8 and FP16 precision, the MI325X reaches peak theoretical performance of 2.6 PF and 1.3 PF, respectively, which puts it about 30% ahead of NVIDIA's H200 in theoretical FP16 computing power. The H200, with 141GB of HBM3e memory and 4.8TB/s of memory bandwidth, remains on par with the H100 in peak compute. The sections below compare the two chips in more detail and then examine why the market reaction was muted.

Technical Comparison: What Sets AMD's MI325X Apart?

● Performance Comparison

On paper, the MI325X leads the H200 in memory capacity, memory bandwidth, and peak compute. It pairs the CDNA 3 architecture with HBM3E high-bandwidth memory, offering up to 256GB of capacity and 6TB/s of bandwidth, against 141GB of HBM3e and 4.8TB/s for NVIDIA's H200. At FP8 and FP16 precision, the MI325X reaches peak theoretical performance of 2.6 PF and 1.3 PF, respectively, about 30% above the H200's theoretical FP16 figure. These are peak numbers; delivered performance depends on the workload and the software stack.
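The ratios behind these claims can be checked with simple arithmetic. The sketch below uses only the figures quoted in this article. The H200 FP16 value is an assumption: it is back-calculated from the 30% claim rather than taken from a datasheet, and all variable names are illustrative.

```python
# Peak theoretical figures as quoted in the article.
MI325X = {"memory_gb": 256, "bandwidth_tbs": 6.0, "fp16_pf": 1.3, "fp8_pf": 2.6}
H200 = {"memory_gb": 141, "bandwidth_tbs": 4.8}

# Assumption: the article states a ~30% FP16 lead for the MI325X, so the
# H200 figure below is implied by that claim, not a published specification.
FP16_LEAD = 1.30
implied_h200_fp16_pf = MI325X["fp16_pf"] / FP16_LEAD

capacity_ratio = MI325X["memory_gb"] / H200["memory_gb"]
bandwidth_ratio = MI325X["bandwidth_tbs"] / H200["bandwidth_tbs"]

# Peak FP16 FLOPs available per byte of memory traffic (the roofline
# "ridge point"). Kernels with lower arithmetic intensity than this are
# limited by memory bandwidth rather than by compute.
flops_per_byte = (MI325X["fp16_pf"] * 1e15) / (MI325X["bandwidth_tbs"] * 1e12)

print(f"memory capacity ratio : {capacity_ratio:.2f}x")
print(f"memory bandwidth ratio: {bandwidth_ratio:.2f}x")
print(f"implied H200 FP16     : {implied_h200_fp16_pf:.2f} PF")
print(f"MI325X FP16 ridge     : {flops_per_byte:.0f} FLOPs/byte")
```

The capacity advantage (roughly 1.8x) is larger than the bandwidth advantage (1.25x), which suggests the MI325X's clearest edge is in fitting larger models on a single device.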

● Architecture Advantages

AMD's CDNA 3 architecture is optimized for AI workloads and performs strongly in matrix operations and sparse computing. This design lets the MI325X deliver higher efficiency and throughput in large language model training, recommendation systems, and computer vision.

● Application Scenarios

In scenarios such as large language model training, the MI325X's high memory bandwidth and computing power make it a strong candidate. AMD also expects the MI325X to outperform the H200 by up to 1.4 times in inference, which matters for serving AI applications in production.
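One way to see why memory capacity and bandwidth matter for these workloads is a back-of-the-envelope estimate. The sketch below is not a benchmark: the 70B-parameter model, the 10% memory reserve, and the function names are all hypothetical, and the throughput figure is only a rough upper bound that assumes each generated token reads every weight from memory once at batch size 1.

```python
def weight_footprint_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in decimal GB."""
    return params * bytes_per_param / 1e9


def fits(weights_gb: float, capacity_gb: float, reserve: float = 0.10) -> bool:
    """True if the weights fit while leaving `reserve` of capacity free.

    The 10% reserve (for KV cache and runtime overhead) is an assumed
    placeholder, not a measured requirement.
    """
    return weights_gb <= capacity_gb * (1.0 - reserve)


def decode_tokens_per_s_bound(bandwidth_tbs: float, weights_gb: float) -> float:
    """Bandwidth-limited upper bound on batch-1 decoding speed."""
    return bandwidth_tbs * 1e12 / (weights_gb * 1e9)


PARAMS = 70e9        # hypothetical 70B-parameter model
FP16_BYTES = 2       # bytes per parameter at FP16
weights = weight_footprint_gb(PARAMS, FP16_BYTES)

# (name, memory capacity in GB, memory bandwidth in TB/s) from the article.
for name, capacity_gb, bandwidth_tbs in [("MI325X", 256, 6.0), ("H200", 141, 4.8)]:
    ok = fits(weights, capacity_gb)
    bound = decode_tokens_per_s_bound(bandwidth_tbs, weights)
    print(f"{name}: weights {weights:.0f} GB, fits on one device: {ok}, "
          f"decode bound ~{bound:.1f} tokens/s")
```

Under these assumptions the FP16 weights alone take 140 GB, which leaves comfortable headroom in 256GB but essentially none in 141GB. Real deployments use quantization, batching, and multi-GPU sharding, so actual results will differ.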

Why the Lackluster Market Response?

1. Ecosystem Shortcomings: Despite AMD's hardware performance breakthroughs, the market's lukewarm response stems mainly from gaps in its AI software ecosystem, developer tools, and community support. NVIDIA's CUDA platform has built a vast developer community, while AMD's ROCm platform, although steadily improving, still needs time to catch up.

2. Historical Burden: AMD's long-standing disadvantage in the GPU market has shaped developers' preferences. Many developers and businesses are accustomed to NVIDIA's products and depend on its software ecosystem.

3. Customer Loyalty: NVIDIA's strong customer base in data centers and AI research institutions gives it significant market stickiness. These customers' loyalty, combined with the cost and risk of migrating to a new platform, makes them unlikely to switch to AMD's products easily.

Ecosystem Development: How Can AMD Break Through?

● ROCm Open Source Project: AMD's ROCm platform is an open-source software ecosystem designed to support the HPC and supercomputing markets. Over the past year ROCm has still been adapting to mainstream AI development platforms, and AMD continues to strengthen and upgrade it to improve training performance and attract more developers and partners.

● Developer Incentive Programs: To attract developers and grow its ecosystem, AMD may offer more technical support, training resources, and collaboration opportunities that encourage developers to adopt its platform.

● Partnerships: AMD is building closer ties with cloud service providers, server manufacturers, and other partners to promote adoption of its AI chips. These collaborations give its chips broader application scenarios and support.
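As a small illustration of what ecosystem compatibility looks like at the framework level, ROCm builds of PyTorch reuse the `torch.cuda` API, so device-agnostic code written for NVIDIA GPUs can often run without changes. The sketch below assumes PyTorch is the framework in use; the function name `describe_backend` is hypothetical, and the check says nothing about custom CUDA kernels, which are where most porting effort goes.

```python
def describe_backend() -> str:
    """Report which GPU backend the installed PyTorch build targets."""
    try:
        import torch
    except ImportError:
        return "pytorch-not-installed"

    # No usable GPU (or no GPU build): report CPU regardless of vendor.
    if not torch.cuda.is_available():
        return "cpu-only"

    # ROCm builds set torch.version.hip to a version string;
    # CUDA builds leave it as None.
    hip_version = getattr(torch.version, "hip", None)
    return "rocm" if hip_version else "cuda"


backend = describe_backend()
print(f"PyTorch backend: {backend}")
```

On both "rocm" and "cuda" results, the same `torch.device("cuda")` string selects the GPU, which is part of how AMD lowers the switching cost for framework-level users.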

Industry Trends and Future Outlook

The AI chip market is expected to keep growing as AI technology matures and spreads, increasing demand for high-performance, low-power AI chips. The competition between AMD and NVIDIA will also drive innovation across the AI industry. As both companies introduce new products, the industry will continue to see more efficient algorithms, more advanced chip manufacturing technologies, and more powerful computing capabilities. Customers will have more choices and higher expectations, which ultimately advances AI technology and broadens its applications.

AMD faces opportunities in the AI chip market, including its breakthroughs in hardware performance and continuous investment in software ecosystems. However, the challenges are also apparent, including overcoming NVIDIA's dominant market position and customer loyalty, as well as establishing a developer community and software ecosystem that rivals NVIDIA's.

Conclusion

While AMD's MI325X demonstrates strong competitiveness in performance, it still faces challenges in ecosystem development, market acceptance, and customer loyalty. AMD needs to increase its investments and efforts in these areas to achieve a true breakthrough in the AI chip market. Meanwhile, this competition will also drive the development of the entire AI industry, providing impetus for future technological innovations and the expansion of application scenarios.

Website: www.conevoelec.com

Email: info@conevoelec.com