New Trends in Low-Power, High-Efficiency AI Chips Driven by DeepSeek

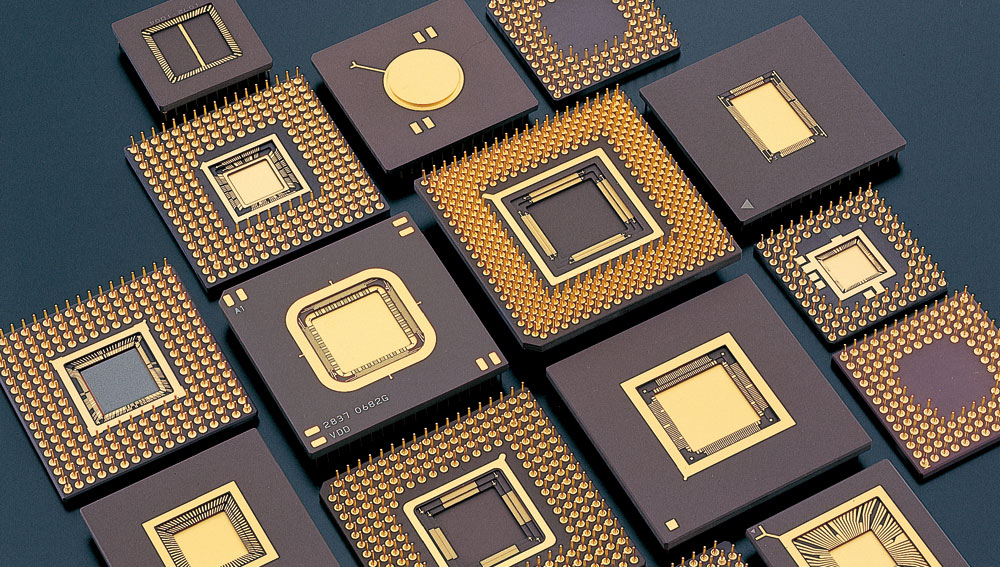

In the rapidly evolving field of artificial intelligence, DeepSeek's models are emerging as a significant force that is shaping the development trajectory of AI chips. With strong capabilities in language understanding and generation, DeepSeek models have performed well in natural language processing applications such as intelligent customer service, text creation, and machine translation. Running these models requires processing large volumes of data and performing complex computations, which places stringent demands on hardware, particularly on AI chips.

The Impact of DeepSeek on the Semiconductor Industry

The deployment of DeepSeek is significantly reshaping the semiconductor landscape. Notably, orders for Nvidia's H20 chip in the Chinese market have surged alongside the rapid rise of DeepSeek. According to industry insiders, since DeepSeek entered the global spotlight, tech giants such as Tencent, Alibaba, and ByteDance have significantly increased their H20 orders, and even smaller companies in sectors such as healthcare and education are procuring AI servers that pair DeepSeek models with H20 chips. For context, analysts estimate that Nvidia shipped approximately 1 million H20 units in 2024, generating over $12 billion in revenue. These figures underscore Nvidia's strong position in the AI chip market and show how the widespread adoption of DeepSeek is driving demand for high-performance chips.

Growing Demand for Low-Power, High-Efficiency Chips

As AI models grow in scale and complexity, the energy and cooling costs of traditional high-power chips are becoming harder to sustain for long-running, large-scale computation. AI chips are therefore moving toward low-power designs that cut energy costs and ease thermal management. Wide-bandgap semiconductor materials such as gallium nitride (GaN) and silicon carbide (SiC) contribute at the system level: in power-conversion circuits they can switch at higher frequencies and with lower losses than silicon, making them promising candidates for the power-delivery systems that feed AI chips and servers. Additionally, integrated computing and storage technologies (such as near-memory and in-memory computing) reduce the overhead of moving data between memory and processing units, further improving energy efficiency.
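To illustrate why reducing data movement matters, the sketch below uses a simple additive energy model: total energy equals compute energy plus data-movement energy. The function name, the workload size, and the per-operation and per-byte energies are all hypothetical placeholders chosen for illustration, not measurements of any real chip; actual values depend heavily on process node and memory technology.

```python
# Hypothetical energy model: total energy = compute energy + data-movement energy.
# The per-operation and per-byte energies are illustrative placeholders,
# not measurements of any real chip.


def total_energy_joules(ops: float, bytes_moved: float,
                        pj_per_op: float, pj_per_byte: float) -> float:
    """Total energy in joules for a workload.

    ops         -- arithmetic operations performed
    bytes_moved -- bytes transferred between memory and compute units
    pj_per_op   -- energy per operation, in picojoules
    pj_per_byte -- energy per byte moved, in picojoules
    """
    return (ops * pj_per_op + bytes_moved * pj_per_byte) * 1e-12


OPS = 1e12    # one trillion operations (hypothetical workload)
BYTES = 1e11  # 100 GB of operand traffic in the conventional design (hypothetical)

# Conventional design: operands travel a long path to and from off-chip memory.
conventional = total_energy_joules(OPS, BYTES, pj_per_op=1.0, pj_per_byte=50.0)

# Near-memory design (assumed): 90% less traffic, and each remaining byte
# travels a shorter, cheaper path. The arithmetic work itself is unchanged.
near_memory = total_energy_joules(OPS, BYTES * 0.1, pj_per_op=1.0, pj_per_byte=10.0)

print(f"conventional: {conventional:.1f} J")  # conventional: 6.0 J
print(f"near-memory:  {near_memory:.1f} J")   # near-memory:  1.1 J
```

Under these assumed numbers, data movement dominates the conventional design's energy budget, so shrinking it saves far more than any change to the arithmetic could.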

In edge computing scenarios, demand for low-power, high-efficiency AI chips is rising rapidly. Edge devices such as smart home products, intelligent security cameras, and wearables typically run on batteries or other limited power supplies yet must process data in real time, so they need AI chips that are both low-power and efficient to extend runtime and respond quickly. For example, Winbond's CUBE solution integrates memory with an SoC using 2.5D or 3D packaging, providing up to 1024 I/Os for ultra-high bandwidth. This makes it an important enabler for lightweight edge AI applications.
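The link between I/O count and bandwidth is simple arithmetic: peak bandwidth equals the number of I/Os times the per-pin data rate, divided by 8 bits per byte. The sketch below applies that formula; the function name and both per-pin data rates are hypothetical values chosen for illustration, not CUBE or other vendor specifications.

```python
# Peak bandwidth of a parallel memory interface:
#   bandwidth (GB/s) = I/O count x per-pin data rate (Gb/s) / 8 bits per byte
# The per-pin data rates below are hypothetical, not vendor specifications.


def peak_bandwidth_gb_per_s(io_count: int, gbit_per_pin: float) -> float:
    """Theoretical peak bandwidth in gigabytes per second."""
    return io_count * gbit_per_pin / 8


# A wide, stacked interface: many pins, each at a modest rate (assumed 2 Gb/s).
wide = peak_bandwidth_gb_per_s(1024, 2.0)

# A narrow conventional interface: few pins at a higher rate (assumed 6.4 Gb/s).
narrow = peak_bandwidth_gb_per_s(32, 6.4)

print(f"1024 I/Os at 2.0 Gb/s: {wide:.1f} GB/s")   # 256.0 GB/s
print(f"32 I/Os at 6.4 Gb/s:   {narrow:.1f} GB/s")  # 25.6 GB/s
```

The design choice this illustrates: widening the interface raises bandwidth without pushing each pin to a higher frequency, which generally helps keep I/O power in check, and the short interconnects of 2.5D/3D packaging are what make such wide interfaces practical.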

Similarly, Himax's WiseEye2 AI processor combines a multi-layer power management mechanism with a multi-core architecture, improving inference speed and efficiency while reducing power consumption. WiseEye2 targets applications that require immediate response and rapid decision-making, such as industrial quality inspection, predictive maintenance, and autonomous driving, as well as applications that prioritize energy efficiency and a compact form factor, such as wearable devices.
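Multi-layer power management generally works by keeping most of the chip in low-power states and waking high-power stages only when needed, so average power is the time-weighted sum of the power in each state. The sketch below models that idea. The state names, power draws, time fractions, battery capacity, and the `PowerState` class are hypothetical illustrations, not WiseEye2 specifications or its actual power scheme.

```python
from dataclasses import dataclass

# Hypothetical multi-level power model for a battery-powered edge AI device.
# State names, power draws, and time fractions are illustrative assumptions,
# not WiseEye2 specifications.


@dataclass
class PowerState:
    name: str
    power_mw: float       # power drawn while in this state, in milliwatts
    time_fraction: float  # share of total time spent in this state


def average_power_mw(states: list[PowerState]) -> float:
    """Time-weighted average power across all states."""
    total_fraction = sum(s.time_fraction for s in states)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    return sum(s.power_mw * s.time_fraction for s in states)


def runtime_hours(battery_mwh: float, avg_power_mw: float) -> float:
    """Battery runtime = stored energy / average power draw."""
    return battery_mwh / avg_power_mw


# Layered design: a low-power sensing stage runs most of the time and
# wakes the high-power inference stage only when something is detected.
layered = [
    PowerState("always-on sensing", 1.0, 0.90),
    PowerState("wake-up check", 10.0, 0.08),
    PowerState("full inference", 100.0, 0.02),
]
# Flat design: the inference stage stays powered all the time.
flat = [PowerState("full inference", 100.0, 1.0)]

BATTERY_MWH = 1000.0  # hypothetical battery capacity

for label, states in (("layered", layered), ("flat", flat)):
    p = average_power_mw(states)
    print(f"{label}: {p:.1f} mW average, {runtime_hours(BATTERY_MWH, p):.0f} h runtime")
```

With these assumed numbers, the layered design averages about 3.7 mW versus 100 mW for the flat design, so the same battery lasts roughly 27 times longer.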

Potential and Development Trends of Specialized Chips

Application-specific integrated circuits (ASICs) hold tremendous potential in artificial intelligence. Because an ASIC can be optimized for specific algorithms, it can achieve much higher energy efficiency than a general-purpose GPU. For example, AWS reports that its Inferentia-based Inf1 instances deliver up to 2.3 times higher throughput and up to 70% lower cost per inference than comparable GPU-based EC2 instances. Looking ahead, emerging computing technologies such as photonic chips may further improve the energy efficiency of AI chips.
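Throughput and cost per inference are related through the hourly instance price: cost per inference equals the hourly price divided by the inferences served per hour, so when comparing two instances only the price ratio and throughput ratio matter. The sketch below shows that arithmetic. The function name and both scenarios are hypothetical; AWS's figures are "up to" values that may come from different workloads, so this is not a reconstruction of actual Inf1 pricing.

```python
# Cost per inference = hourly instance price / inferences served per hour.
# Comparing instance B against instance A, only the ratios matter:
#   relative cost = (price_B / price_A) / (throughput_B / throughput_A)


def relative_cost_per_inference(price_ratio: float, throughput_ratio: float) -> float:
    """Cost per inference of instance B relative to instance A."""
    return price_ratio / throughput_ratio


# Scenario 1 (hypothetical): same hourly price, 2.3x the throughput.
same_price = relative_cost_per_inference(1.0, 2.3)
print(f"same price: {same_price:.3f}x cost, {1 - same_price:.1%} saving")

# Scenario 2 (hypothetical): what hourly price ratio, combined with 2.3x
# throughput, would yield a 70% saving (relative cost of 0.30)?
required_price_ratio = 0.30 * 2.3
print(f"required price ratio: {required_price_ratio:.2f}")
```

Under these assumptions, a throughput gain alone would cut cost per inference by about 57%; a saving as large as 70% would additionally require a lower hourly price.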

At the same time, although some Chinese domestic chips may outperform Nvidia's H20 on paper, the H20 still holds an important position in practice thanks to its HBM memory and the mature CUDA software ecosystem. This ecosystem advantage leads many enterprises to choose Nvidia solutions first when deploying DeepSeek models, which shows that the rise of specialized chips depends not only on hardware performance but also on robust software ecosystem support.

Compatibility of Different Chips with AI Models

Different types of AI chips have distinct strengths and weaknesses when running DeepSeek models. GPUs, with their powerful parallel computing capabilities, excel at deep learning training and inference and remain the mainstream AI computing chips. However, as models keep growing, their high power consumption and comparatively low energy efficiency (performance per watt) are becoming bottlenecks. NPUs (Neural Processing Units), designed specifically for neural network computation, offer advantages in energy efficiency and speed, making them well suited to running DeepSeek inference at low power. TPUs (Tensor Processing Units) are specialized for tensor operations and can efficiently support the large-scale matrix computations in DeepSeek models.
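To make "large-scale matrix computations" concrete, the sketch below counts the operations in one matrix multiplication and converts them into a lower-bound compute time. The function names, matrix sizes, peak throughput, and utilization are hypothetical values for illustration only, not DeepSeek layer dimensions or the specifications of any particular GPU, NPU, or TPU.

```python
# Operation count and minimum compute time for one matrix multiplication
# C[m x n] = A[m x k] @ B[k x n], the core workload of transformer layers.
# Matrix sizes, peak throughput, and utilization are hypothetical.


def matmul_ops(m: int, n: int, k: int) -> int:
    """Each of the m*n outputs needs k multiply-accumulates, counted as 2 ops each."""
    return 2 * m * n * k


def compute_time_us(ops: int, peak_tops: float, utilization: float) -> float:
    """Lower-bound compute time in microseconds at a sustained fraction of peak."""
    ops_per_second = peak_tops * 1e12 * utilization
    return ops / ops_per_second * 1e6


# e.g. 64 tokens multiplied through a 4096 x 4096 weight matrix (hypothetical)
ops = matmul_ops(64, 4096, 4096)
print(f"{ops:,} operations")  # 2,147,483,648 operations
print(f"{compute_time_us(ops, 100.0, 0.5):.1f} us at 100 TOPS, 50% utilization")
```

Because the operation count is fixed by the matrix shapes, a chip's advantage on this workload comes from sustaining a higher fraction of its peak throughput and doing so at lower power, which is what dedicated matrix engines in NPUs and TPUs generally aim for.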

Impact of Large AI Models on Chip Designers

The development of DeepSeek models has had a profound impact on chip designers. On one hand, it has prompted them to increase R&D investment and keep innovating to meet the growing demand for AI computing. For example, domestic AI chip companies such as Huawei (Ascend) and MuXi have actively adapted DeepSeek large models and report performance on par with high-end international GPUs through hardware-software co-optimization, accelerating the deployment of edge-side AI. Companies such as Biren Technology and Kunlunxin have also completed DeepSeek model adaptation, reporting strong performance and cost efficiency.

On the other hand, it has driven chip designers to explore new chip architectures and application scenarios, promoting technological upgrades and transformation across the entire industry. For example, PIMCHIP has developed chips specialized for large-model inference based on a heterogeneous parallel computing architecture and, through hardware-software co-optimization, has significantly improved large-model inference performance. The PIMCHIP-S300 series uses an integrated computing and storage architecture to combine high energy efficiency, a small form factor, low power consumption, and low cost, with a compute-core energy efficiency of up to 27 TOPS/W. These chips also support ultra-low-power wake-up, voice recognition, and motion monitoring, saving up to 90% of the energy required for specific computing tasks.
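To put the 27 TOPS/W figure in perspective, it can be converted into energy per operation: 1 TOPS/W means 10^12 operations per joule. The sketch below performs that conversion and applies a 90% saving to a baseline. The helper names and the 50 mJ baseline are hypothetical, since the source does not state what the 90% saving is measured against; note also that a compute-core rating typically excludes memory-access and control overhead, so whole-chip efficiency is usually lower.

```python
# Converting an energy-efficiency rating in TOPS/W into energy per operation.
# 1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule,
# so energy per operation = 1 / (TOPS/W * 1e12) joules.


def femtojoules_per_op(tops_per_watt: float) -> float:
    """Energy per operation in femtojoules (1 fJ = 1e-15 J)."""
    joules_per_op = 1.0 / (tops_per_watt * 1e12)
    return joules_per_op * 1e15


def energy_after_saving(baseline_joules: float, saving_fraction: float) -> float:
    """Energy remaining after a fractional saving (0.90 means 90% saved)."""
    return baseline_joules * (1.0 - saving_fraction)


print(f"27 TOPS/W -> {femtojoules_per_op(27.0):.1f} fJ per operation")  # 37.0 fJ
# Hypothetical 50 mJ baseline task with a 90% energy saving:
print(f"{energy_after_saving(0.050, 0.90) * 1e3:.1f} mJ remaining")
```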

Conclusion

Driven by DeepSeek models, the AI chip industry is moving toward low-power, high-efficiency solutions. Growing demand for such chips in edge computing, together with the potential of specialized chips, presents new opportunities and challenges for chip designers. As technology advances, AI chips are expected to achieve further breakthroughs in energy efficiency, computational density, and cost control, providing stronger support for the widespread application of artificial intelligence.

Website: www.conevoelec.com

Email: info@conevoelec.com